Parallel Read Support


You can enable parallel reads via the enableParallelRead option.

```python
# Assumes an existing SparkSession `spark` with the SingleStore connector available.
df = (
    spark.read.format("singlestore")
    .option("enableParallelRead", "automatic")
    .option("parallelRead.Features", "readFromAggregatorsMaterialized,readFromAggregators")
    .option("parallelRead.repartition", "true")
    .option("parallelRead.repartition.columns", "a, b")
    .option("parallelRead.TableCreationTimeout", "1000")
    .load("db.table")
)
```

enableParallelRead Modes

The enableParallelRead option can have one of the following values:

- disabled: Disables parallel reads and performs non-parallel reads.
- automaticLite: Performs parallel reads if at least one parallel read feature specified in parallelRead.Features is supported. Otherwise, performs a non-parallel read. In automaticLite mode, if an outer sorting operation (for example, a nested SELECT statement where sorting is done in a top-level SELECT) is pushed down into SingleStore, a non-parallel read is used.
- automatic: Performs parallel reads if at least one parallel read feature specified in parallelRead.Features is supported. Otherwise, performs a non-parallel read. In automatic mode, the singlestore-spark-connector is unable to push down an outer sorting operation into SingleStore. Final sorting is done on the Spark side.
- forced: Performs parallel reads if at least one parallel read feature specified in parallelRead.Features is supported. Otherwise, it returns an error. In forced mode, the singlestore-spark-connector is unable to push down an outer sorting operation into SingleStore. Final sorting is done on the Spark side.

Note

By default, enableParallelRead is set to automaticLite.
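The mode descriptions above can be condensed into a small decision function. This is a minimal Python sketch of the documented behavior only; `read_kind` and its arguments are hypothetical names, not part of the connector, and whether a feature is supported or an outer sort was pushed down is passed in as a flag rather than detected.

```python
def read_kind(feature_supported: bool,
              mode: str = "automaticLite",
              outer_sort_pushed_down: bool = False) -> str:
    """Return "parallel" or "non-parallel" for one read, following the
    documented enableParallelRead modes. Hypothetical model, not connector code.

    feature_supported: at least one feature in parallelRead.Features is supported.
    outer_sort_pushed_down: an outer sort was pushed into SingleStore
    (described only for automaticLite mode).
    """
    if mode == "disabled":
        return "non-parallel"
    if mode == "automaticLite":
        # After an outer sort is pushed down into SingleStore, the read is non-parallel.
        if outer_sort_pushed_down:
            return "non-parallel"
        return "parallel" if feature_supported else "non-parallel"
    if mode == "automatic":
        # Outer sorts are not pushed down in this mode; Spark does the final sort.
        return "parallel" if feature_supported else "non-parallel"
    if mode == "forced":
        if not feature_supported:
            raise RuntimeError("forced mode: no feature in parallelRead.Features is supported")
        # Outer sorts are not pushed down in this mode; Spark does the final sort.
        return "parallel"
    raise ValueError(f"unknown enableParallelRead mode: {mode!r}")


# Default mode (automaticLite), a supported feature, no pushed-down sort:
print(read_kind(feature_supported=True))  # parallel
```

The flag-based design keeps the sketch focused on the mode semantics; in practice the connector makes these determinations itself.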

Parallel Read Features

The SingleStore Spark Connector supports the following parallel read features:

- readFromAggregators
- readFromAggregatorsMaterialized
- readFromLeaves

Note

SingleStore Helios only supports the readFromAggregators and readFromAggregatorsMaterialized features.

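The connector uses the first feature specified in parallelRead.Features that meets all of its requirements. The requirements for each feature are listed below. By default, the connector uses the readFromAggregators feature. Repartitioning of the result is available for the readFromAggregators and readFromAggregatorsMaterialized features.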
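The selection rule above, together with the Helios note, can be sketched in Python. This assumes feature names are matched exactly as written on this page; `pick_feature` is a hypothetical helper, and `requirements_met` stands in for the per-feature requirement checks listed under each feature, which the sketch does not perform itself.

```python
from typing import Optional, Set

KNOWN_FEATURES = {"readFromAggregators", "readFromAggregatorsMaterialized", "readFromLeaves"}
# SingleStore Helios supports only these two features.
HELIOS_FEATURES = {"readFromAggregators", "readFromAggregatorsMaterialized"}


def pick_feature(features_option: str, requirements_met: Set[str],
                 helios: bool = False) -> Optional[str]:
    """Return the first feature listed in a parallelRead.Features value whose
    requirements are met, or None if none qualifies (hypothetical helper)."""
    for raw in features_option.split(","):
        name = raw.strip()
        if name not in KNOWN_FEATURES:
            raise ValueError(f"unknown parallel read feature: {name!r}")
        if helios and name not in HELIOS_FEATURES:
            continue  # readFromLeaves is not available on Helios
        if name in requirements_met:
            return name  # list order is priority order
    # No feature qualifies: the enableParallelRead mode decides between a
    # non-parallel read and an error.
    return None


print(pick_feature("readFromAggregatorsMaterialized,readFromAggregators",
                   requirements_met={"readFromAggregators"}))  # readFromAggregators
```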

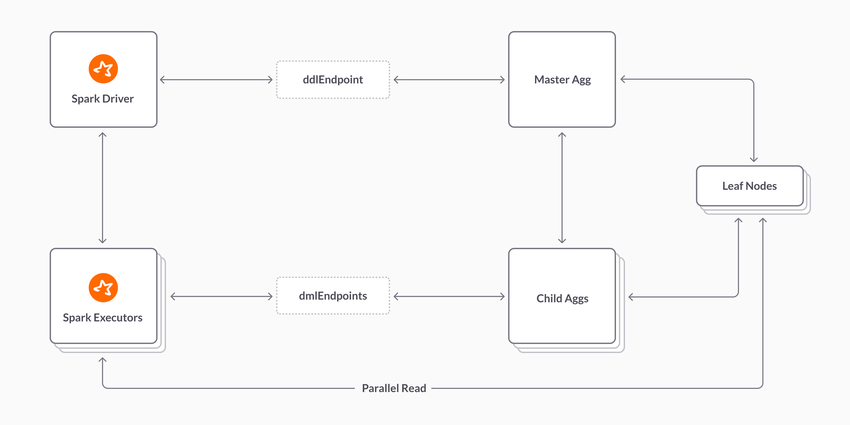

readFromAggregators

When this feature is used, the number of partitions in the resulting DataFrame is the lesser of the number of partitions in the SingleStore database and the Spark parallelism level (i.e., the total number of tasks that all executors can run concurrently). parallelRead. option.

Use the parallelRead. option to specify a timeout for result table creation.
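As a worked example of the readFromAggregators partition count, the Python sketch below computes it. It assumes the Spark parallelism level is the sum, over all executors, of spark.executor.cores integer-divided by spark.task.cpus (the number of tasks each executor can run at once); the function names are hypothetical.

```python
from typing import Sequence


def spark_task_slots(executor_cores: Sequence[int], task_cpus: int = 1) -> int:
    """Tasks that can run at once: each executor runs cores // task_cpus tasks.
    Assumed inputs: spark.executor.cores per executor and spark.task.cpus."""
    if task_cpus < 1:
        raise ValueError("task_cpus must be at least 1")
    return sum(cores // task_cpus for cores in executor_cores)


def aggregator_read_partitions(db_partitions: int, executor_cores: Sequence[int],
                               task_cpus: int = 1) -> int:
    """Partition count for readFromAggregators: the lesser of the database
    partition count and the Spark parallelism level."""
    return min(db_partitions, spark_task_slots(executor_cores, task_cpus))


# A 16-partition database read by 3 executors with 4 cores each (12 task slots):
print(aggregator_read_partitions(16, [4, 4, 4]))  # 12
```

With 24 or more task slots, the same 16-partition database would yield 16 DataFrame partitions, since the database partition count becomes the smaller value.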

Requirements

To use this feature, the following requirements must be met:

- SingleStore version 7.5+
- SingleStore Spark Connector version 3.2+
- Either the database option is set, or the database name is specified in the load option
- SingleStore parallel read functionality supports the generated query
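The first three requirements above can be checked ahead of time; the fourth depends on the query the connector generates, so this Python sketch takes it as a flag. The helper names, the version parsing (anything after the first `-`, such as a build suffix, is ignored), and the "db.table" check on the load() argument are illustrative assumptions. The same list applies to readFromAggregatorsMaterialized.

```python
from typing import Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """'7.5.12' -> (7, 5, 12); anything after the first '-' is ignored."""
    return tuple(int(part) for part in text.split("-", 1)[0].split("."))


def meets_aggregator_requirements(singlestore_version: str, connector_version: str,
                                  database_option: Optional[str], load_target: str,
                                  query_supported: bool) -> bool:
    """Hypothetical pre-check of the readFromAggregators(Materialized) requirements."""
    versions_ok = (parse_version(singlestore_version) >= (7, 5)
                   and parse_version(connector_version) >= (3, 2))
    # The database is known from the `database` option or a "db.table" load() argument.
    database_ok = bool(database_option) or "." in load_target
    # Whether SingleStore supports the generated query cannot be checked here.
    return versions_ok and database_ok and query_supported


print(meets_aggregator_requirements("8.1.20", "4.1.3", None, "db.table", True))  # True
```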

readFromAggregatorsMaterialized

When using this feature, the number of partitions in the resulting DataFrame is the same as the number of partitions in the SingleStore database. parallelRead. option. This feature works like the readFromAggregators feature, except that readFromAggregatorsMaterialized uses the MATERIALIZED option to create the result table. Using the MATERIALIZED option may cause a query to fail if SingleStore does not have enough memory to materialize the result set.

Use the parallelRead. option to specify a timeout for materialized result table creation.

Requirements

To use this feature, the following requirements must be met:

- SingleStore version 7.5+
- SingleStore Spark Connector version 3.2+
- Either the database option is set, or the database name is specified in the load option
- SingleStore parallel read functionality supports the generated query

readFromLeaves

When this feature is used, the singlestore-spark-connector skips the transaction layer and reads directly from partitions on the leaf nodes.

This feature supports only those query shapes that do not perform any operation on the aggregator and can be pushed down to the leaf nodes. df..parallelRead. option.

Requirements

To use this feature, the following requirements must be met:

- Either the database option is set, or the database name is specified in the load option
- The username and password provided to the singlestore-spark-connector must be the same on all nodes in the cluster, because parallel reads require consistent authentication and reachable leaf nodes
- The hostnames and ports listed by SHOW LEAVES must be directly reachable from Spark
- The generated query can be pushed down to the leaf nodes
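The host-and-port requirement can be probed before enabling readFromLeaves. This Python sketch only attempts a TCP connection to each host and port you pass in (for example, values copied from SHOW LEAVES output) and reports the ones that fail; it does not log in, so it says nothing about whether credentials are the same on every node. `unreachable_leaves` is a hypothetical helper, and running it from the machines where Spark tasks execute is an assumption about where the leaf connections originate.

```python
import socket
from typing import Iterable, List, Tuple


def unreachable_leaves(leaves: Iterable[Tuple[str, int]],
                       timeout: float = 2.0) -> List[Tuple[str, int]]:
    """Return the (host, port) pairs that refuse, fail to resolve, or time out
    on a plain TCP connect."""
    failed = []
    for host, port in leaves:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                pass  # TCP handshake succeeded; no SQL login is attempted
        except OSError:  # covers refused connections, DNS errors, and timeouts
            failed.append((host, port))
    return failed
```

An empty result means every listed address accepted a TCP connection from the machine running the check.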

Parallel Read Repartitioning

You can repartition the result using the parallelRead.repartition option for the readFromAggregators and readFromAggregatorsMaterialized features to ensure that each task reads approximately the same amount of data.
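The options used in the example at the top of this page can be assembled and checked before a read. This Python sketch, built around the hypothetical helper `parallel_read_options`, validates mode and feature names against the lists on this page and rejects repartitioning when neither readFromAggregators nor readFromAggregatorsMaterialized is requested. It assumes parallelRead.repartition.columns takes a comma-separated column list, as in that example, and it leaves out the table creation timeout.

```python
from typing import Dict, Iterable, Optional

MODES = {"disabled", "automaticLite", "automatic", "forced"}
FEATURES = {"readFromAggregators", "readFromAggregatorsMaterialized", "readFromLeaves"}
# Repartitioning is described only for these two features.
REPARTITIONABLE = {"readFromAggregators", "readFromAggregatorsMaterialized"}


def parallel_read_options(mode: str, features: Iterable[str],
                          repartition_columns: Optional[Iterable[str]] = None) -> Dict[str, str]:
    """Build a reader option dictionary (hypothetical helper)."""
    features = list(features)
    if mode not in MODES:
        raise ValueError(f"unknown enableParallelRead mode: {mode!r}")
    unknown = [f for f in features if f not in FEATURES]
    if unknown:
        raise ValueError(f"unknown parallel read features: {unknown}")
    opts = {"enableParallelRead": mode, "parallelRead.Features": ",".join(features)}
    if repartition_columns is not None:
        if not REPARTITIONABLE.intersection(features):
            raise ValueError("repartitioning applies only to readFromAggregators "
                             "and readFromAggregatorsMaterialized")
        opts["parallelRead.repartition"] = "true"
        columns = list(repartition_columns)
        if columns:
            opts["parallelRead.repartition.columns"] = ", ".join(columns)
    return opts
```

In PySpark, the resulting dictionary can be passed with `spark.read.format("singlestore").options(**opts).load("db.table")`, since `DataFrameReader.options` accepts keyword arguments.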

Last modified: February 23, 2024