Query Performance Tools

On this page

Run ANALYZE

The ANALYZE command collects data statistics on a table to facilitate accurate query optimization.ANALYZE your tables after inserting, updating, or deleting large numbers of rows (30% of your table row count is a good rule of thumb).

EXPLAIN

Returns detailed information on how the query is compiled.

PROFILE

Provides detailed resources usage metrics about a query.

Auto Profiling

Prior to the 8.PROFILE could only be utilized on a per-query basis.SHOW PROFILE command was run to obtain the profile results.PROFILE command is explicitly written with the query.

PROFILE <statement>;SHOW PROFILE;

Now SingleStore offers two automatic profiling modes (called "auto profiling" hereafter): FULL and LITE.

FULL auto profiling collects statistics for every run of the query with an existing query plan.

FULL auto profiling may cause additional network latency between 1-5 milliseconds on mostly low latency queries.FULL auto profiling can cause a small variable overhead on the CPU.FULL auto profiling works best for queries where adding this latency won't degrade the performance of the overall workload; such as analytical queries that execute in the order of seconds not milliseconds.

LITE auto profiling collects statistics on the first run of a new query plan and when a query plan is loaded from disk to memory.FULL auto profiling.

There is negligible memory overhead for high throughput and low latency queries for using the LITE auto profiling setting.LITE auto profiling works best on transaction processing workloads with mostly inserts and updates.

The following code snippet shows how to turn on auto profiling and then how to turn on the FULL or LITE auto profiling type.enable_ engine variable.SUPER permissions, but SUPER permissions are not required to set this engine variable at the session level:

SET GLOBAL enable_auto_profile = ON;

Next, you must set the auto_FULL or LITE:

SET GLOBAL auto_profile_type = FULL | LITE;

Note

If you choose to utilize auto profiling, if you decide to turn it off (enable_), your plans will be recompiled.LITE and FULL auto profiling instead (SET GLOBAL auto_).

Auto Profiling Use Cases

Capturing Live vs Historical Profiles

The profiles of both explicitly- and auto-profiled queries are obtained using the same method.

Live profiles (also called a partial profile) are available if the query is profiled while currently executing.SHOW PROCESSLIST command.ID from the SHOW PROCESSLIST results as the process_, the live profile can be obtained by running the following command in a different session.PROCESS permission.

SHOW PROFILE [JSON] PROCESS <process_id>;

Note

Please note, when you run SHOW PROFILE PROCESS on a running query, the query's execution will be interrupted as the engine gathers the currently collected statistics to be returned to the client.SHOW PROFILE PROCESS at high frequency, as it can degrade the performance of the query.

To obtain a comprehensive profile after the session has completed the execution of the profiled query, you can simply run SHOW PROFILE [JSON] (without the PROCESS and process_ arguments) from the same session that just finished executing the query.

Historical profiles are obtained by adding a PLAN and plan_ argument to the SHOW PROFILE command.plan_ is obtained by running the SHOW PLANCACHE command.information_ view with the appropriate filters to narrow the search for the correct plan.

SHOW PROFILE [JSON] PLAN <plan_id>;

Profile statistics from previous executions are stored independently of individual users and sessions.SHOW PROFILE PLAN.SHOW PROFILE on the session that finished first becomes useful, as the profile statistics from that session's query execution remain available.

Note

Please note, when executing the same query from different clients, two plans are generated with a different plan_ in the plancache.

There is no way to manually drop historical query profiles without removing the associated query plan from the in-memory plancache.

Profiling Use Cases in Production Environments

The following use cases assume the auto profile type is set to LITE except for the last use case.

-

Investigating why a query inexplicably slows down:

Despite the absence of operators' individual execution times and network times in the initial run, the plan's profile stats still include the total execution time, row counts, and memory usage.

As a result, if the query plan starts running slower than anticipated, the user can save the initial run's stats and perform an explicit profile execution. The explicit profile will show which parts of the query are most expensive and how the query execution has changed by comparing row counts, memory usages, and network traffic. -

Investigating why a workload or a procedure inexplicably slows down:

In this situation, if the exact issue cannot be identified through workload profiling and existing monitoring tools, the

auto_can be set toprofile_ type FULLto collect basic stats from the procedures and or sessions that require profiling.Additionally, during intervals between query executions, the user can periodically send SHOW PROFILE [JSON] PLANqueries to persist individual profiles.If more detailed stats are needed for specific query plans, the explicit profile approach can be utilized. -

Checking whether a long-running query is progressing or should be terminated:

The user can capture and save a live profile of the query assuming the query is collecting statistics.

After waiting for a few minutes, the user can capture another live profile and compare the two profiles, specifically examining the row counts. If the row counts have changed, it indicates that the query is making progress, but if they haven't, it is likely that the query is stuck at some point. Or, if the row counts are only changing for one operator, then that operator may be the source of the slowness; refactoring the query or changing table and index structures may improve that. -

Determining which query plans are more likely to be resource-intensive:

In this situation, the user may anticipate high demand from their application and consequently expect increased resource usage during a specific time window.

To proactively prepare, the user can analyze the profile stats of existing plans to estimate which plans are likely to be more resource-demanding on the cluster. -

Obtaining actual profiling information from users so the support team can troubleshoot query performance issues:

This situation is especially helpful when a potentially degraded query is part of a workload and it's difficult to profile the individual queries.

Also, some query degradation presents itself intermittently. Setting auto profiling can capture the unexpected performance decline.

Profiling Use Cases in Query/Workload Tuning

-

Understanding what the impact would be if new DML queries are added to a workload:

The user can switch the

auto_toprofile_ type FULL, execute the workload, and then switch theauto_back toprofile_ type LITE.Then, the user can obtain profile statistics on the query plans that were executed as part of the workload. If specific query plans require detailed information such as individual execution times and network times, the user can explicitly profile them as well. -

Performance testing a stored procedure:

Typically, workload profiling is the primary approach for analyzing the performance of an entire workload.

Query profiling is intended to complement workload profiling, so if there is a noticeable change in the workload profiling results, query profiles of both new and existing DML queries can be captured and compared. It is important to note that the profiles of existing DML queries may undergo significant changes due to the introduction of new DML queries, which can potentially alter row counts. Leveraging auto profiles can assist in capturing these changes effectively.

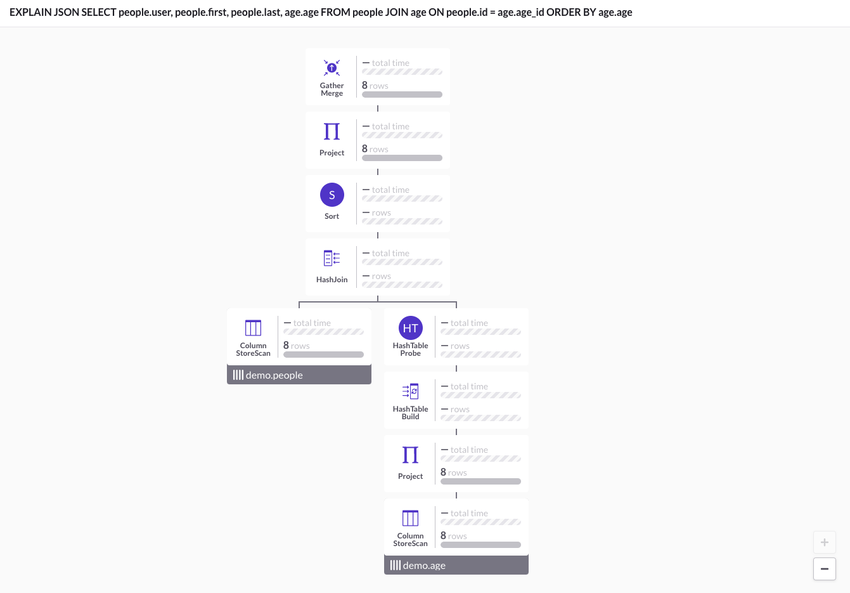

Visual Explain

Visual Explain is a feature of SingleStore that allows customers to see a query plan visualized via a graphical interface.

Query Performance Tool Results For The Same Query

Using a join between the people and age tables shown in the shard key and sort key topics, this is what we get when we submit the same query to EXPLAIN, PROFILE, and VISUAL EXPLAIN.

EXPLAIN:

EXPLAIN SELECT people.user, people.first, people.last, age.ageFROM people JOIN age ON people.id = age.age_idORDER BY age.age;

+----------------------------------------------------------------------------------------------------------------------------------------------------+

| EXPLAIN |

+----------------------------------------------------------------------------------------------------------------------------------------------------+

| GatherMerge [remote_0.age] partitions:all est_rows:8 alias:remote_0 |

| Project [people.user, people.first, people.last, age.age] est_rows:8 est_select_cost:16 |

| TopSort limit:[?] [age.age] |

| HashJoin |

| |---HashTableProbe [people.id = age.age_id] |

| | HashTableBuild alias:age |

| | Project [age_0.age, age_0.age_id] est_rows:8 |

| | ColumnStoreScan demo.age AS age_0, KEY __UNORDERED () USING CLUSTERED COLUMNSTORE table_type:sharded_columnstore est_table_rows:8 est_filtered:8 |

| ColumnStoreScan demo.people, KEY __UNORDERED () USING CLUSTERED COLUMNSTORE table_type:sharded_columnstore est_table_rows:8 est_filtered:8 |

+----------------------------------------------------------------------------------------------------------------------------------------------------+PROFILE:

PROFILE SELECT people.user, people.first, people.last, age.ageFROM people JOIN age ON people.id = age.age_idORDER BY age.age;

+-------------+-----------+------------+-----+

| user | first | last | age |

+-------------+-----------+------------+------

| cjohnson | carrie | johnson | 10 |

| dyarbrough | dennis | yarbrough | 13 |

| pquincy | penelope | quincy | 21 |

| cachebe | chioma | achebe | 29 |

| jmalik | jahid | malik | 35 |

| kharris | keisha | harris | 43 |

| tling | tai | ling | 67 |

| sstevens | samuel | stevens | 72 |

+-------------+-----------+------------+-----+

{

"plan_warnings": {

},

"execution_warnings": {

},

"mpl_path":"\/var\/lib\/memsql\/instance\/plancache\/a91\/Select_profile_age__et_al_a9111431d10dfe90ceed8b3f2b8e9c1d9706242c27af5f7ee4e97d7d3954a29a_6400bba1c33851b2",

"profile":[

{

"executor":"GatherMerge",

"keyId":4295163904,

"order":[

"remote_0.age"

],

"partitions":"all",

"est_rows":"8",

"est_rows_source":"JOIN",

"query":"SELECT STRAIGHT_JOIN `people`.`user` AS `user`, `people`.`first` AS `first`, `people`.`last` AS `last`, `age`.`age` AS `age` FROM (`demo_0`.`people` as `people` STRAIGHT_JOIN ( SELECT WITH(NO_MERGE_THIS_SELECT=1) `age_0`.`age` AS `age`, `age_0`.`age_id` AS `age_id` FROM `demo_0`.`age` as `age_0` ) AS `age`WITH (gen_min_max = TRUE)) WHERE (`people`.`id` = `age`.`age_id`) ORDER BY 4 \/*!90623 OPTION(NO_QUERY_REWRITE=1, INTERPRETER_MODE=INTERPRET_FIRST, CLIENT_FOUND_ROWS=1)*\/",

"alias":"remote_0",

"actual_total_time":{ "value":0 },

"start_time":{ "value":0 },

"end_time":{ "value":1 },

"actual_row_count":{ "value":8, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"inputs":[

{

"executor":"Project",

"keyId":196736,

"out":[

{

"alias":"",

"projection":"people.user"

},

{

"alias":"",

"projection":"people.first"

},

{

"alias":"",

"projection":"people.last"

},

{

"alias":"",

"projection":"age.age"

}

],

"est_rows":"8",

"est_rows_source":"JOIN",

"est_select_cost":"16",

"subselects":[],

"actual_row_count":{ "value":8, "avg":4.000000, "stddev":1.000000, "max":5, "maxPartition":0 },

"actual_total_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"start_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"network_traffic":{ "value":202, "avg":101.000000, "stddev":18.000000, "max":119, "maxPartition":0 },

"network_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"inputs":[

{

"executor":"Sort",

"keyId":196744,

"order":[

"age.age"

],

"actual_row_count":{ "value":8, "avg":4.000000, "stddev":1.000000, "max":5, "maxPartition":0 },

"actual_total_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"start_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"memory_usage":{ "value":785424, "avg":392712.000000, "stddev":0.000000, "max":392712, "maxPartition":0 },

"inputs":[

{

"executor":"HashJoin",

"keyId":327681,

"type":"inner",

"subselects":[],

"actual_row_count":{ "value":8, "avg":4.000000, "stddev":1.000000, "max":5, "maxPartition":0 },

"actual_total_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"start_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"inputs":[

{

"executor":"HashTableProbe",

"keyId":327825,

"condition":[

"people.id = age.age_id"

],

"inputs":[

{

"executor":"HashTableBuild",

"keyId":327824,

"alias":"age",

"actual_row_count":{ "value":8, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"actual_total_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"start_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"memory_usage":{ "value":262144, "avg":131072.000000, "stddev":0.000000, "max":131072, "maxPartition":0 },

"inputs":[

{

"executor":"Project",

"keyId":327808,

"out":[

{

"alias":"",

"projection":"age_0.age"

},

{

"alias":"",

"projection":"age_0.age_id"

}

],

"est_rows":"8",

"est_rows_source":"JOIN",

"subselects":[],

"actual_row_count":{ "value":8, "avg":4.000000, "stddev":1.000000, "max":5, "maxPartition":0 },

"actual_total_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"start_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"inputs":[

{

"executor":"ColumnStoreScan",

"keyId":4295360512,

"db":"demo",

"table":"age",

"alias":"age_0",

"index":"KEY __UNORDERED () USING CLUSTERED COLUMNSTORE",

"storage":"columnar",

"table_type":"sharded_columnstore",

"columnstore_in_memory_scan_type":"TableScan",

"columnstore_in_memory_scan_index":"KEY __UNORDERED () USING CLUSTERED COLUMNSTORE",

"est_table_rows":"8",

"est_filtered":"8",

"est_filtered_source":"DEFAULT",

"actual_row_count":{ "value":8, "avg":4.000000, "stddev":1.000000, "max":5, "maxPartition":0 },

"actual_total_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"start_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"memory_usage":{ "value":262144, "avg":131072.000000, "stddev":0.000000, "max":131072, "maxPartition":0 },

"segments_scanned":{ "value":2, "avg":1.000000, "stddev":0.000000, "max":1, "maxPartition":0 },

"segments_skipped":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"segments_fully_contained":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"segments_filter_encoded_data":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"blob_fetch_network_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"segments_in_blob_cache":{ "value":2, "avg":1.000000, "stddev":0.000000, "max":1, "maxPartition":0 },

"inputs":[]

}

]

}

]

}

]

},

{

"executor":"ColumnStoreScan",

"keyId":4295229440,

"db":"demo",

"table":"people",

"alias":"people",

"index":"KEY __UNORDERED () USING CLUSTERED COLUMNSTORE",

"storage":"columnar",

"table_type":"sharded_columnstore",

"columnstore_in_memory_scan_type":"TableScan",

"columnstore_in_memory_scan_index":"KEY __UNORDERED () USING CLUSTERED COLUMNSTORE",

"est_table_rows":"8",

"est_filtered":"8",

"est_filtered_source":"DEFAULT",

"actual_row_count":{ "value":8, "avg":4.000000, "stddev":1.000000, "max":5, "maxPartition":0 },

"actual_total_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"start_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"memory_usage":{ "value":262144, "avg":131072.000000, "stddev":0.000000, "max":131072, "maxPartition":0 },

"segments_scanned":{ "value":2, "avg":1.000000, "stddev":0.000000, "max":1, "maxPartition":0 },

"segments_skipped":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"segments_fully_contained":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"segments_filter_encoded_data":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"blob_fetch_network_time":{ "value":0, "avg":0.000000, "stddev":0.000000, "max":0, "maxPartition":0 },

"segments_in_blob_cache":{ "value":2, "avg":1.000000, "stddev":0.000000, "max":1, "maxPartition":0 },

"inputs":[]

}

]

}

]

}

]

}

]

}

],

"version":"4",

"info":{

"memsql_version":"7.5.8",

"memsql_version_hash":"12c73130aa6881ec53d57b44a654e4bada1a07c5",

"num_online_leaves":"2",

"num_online_aggs":"1",

"context_database":"demo"

},

"query_info":{

"query_text":"PROFILE SELECT people.user, people.first, people.last, age.age\n FROM people\n JOIN age ON people.id = age.age_id\n ORDER BY age.age",

"total_runtime_ms":"2",

"text_profile":"GatherMerge [remote_0.age] partitions:all est_rows:8 alias:remote_0 exec_time: 0ms start_time: 00:00:00.000 end_time: 00:00:00.001 actual_rows: 8\nProject [people.user, people.first, people.last, age.age] est_rows:8 est_select_cost:16 actual_rows: 8 exec_time: 0ms start_time: 00:00:00.000 network_traffic: 0.202000 KB network_time: 0ms\nSort [age.age] actual_rows: 8 exec_time: 0ms start_time: 00:00:00.000 memory_usage: 785.424011 KB\nHashJoin actual_rows: 8 exec_time: 0ms start_time: 00:00:00.000\n|---HashTableProbe [people.id = age.age_id]\n| HashTableBuild alias:age actual_rows: 8 exec_time: 0ms start_time: 00:00:00.000 memory_usage: 262.144012 KB\n| Project [age_0.age, age_0.age_id] est_rows:8 actual_rows: 8 exec_time: 0ms start_time: 00:00:00.000\n| ColumnStoreScan demo.age AS age_0, KEY __UNORDERED () USING CLUSTERED COLUMNSTORE table_type:sharded_columnstore est_table_rows:8 est_filtered:8 actual_rows: 8 exec_time: 0ms start_time: 00:00:00.000 memory_usage: 262.144012 KB segments_scanned: 2 segments_skipped: 0 segments_fully_contained: 0 blob_fetch_network_time: 0ms segments_in_blob_cache: 2\nColumnStoreScan demo.people, KEY __UNORDERED () USING CLUSTERED COLUMNSTORE table_type:sharded_columnstore est_table_rows:8 est_filtered:8 actual_rows: 8 exec_time: 0ms start_time: 00:00:00.000 memory_usage: 262.144012 KB segments_scanned: 2 segments_skipped: 0 segments_fully_contained: 0 blob_fetch_network_time: 0ms segments_in_blob_cache: 2\nCompile Total Time: 0ms\n",

"compile_time_stats":{

"mbc_emission":"0",

"create_mbc_context":"0",

"optimizer_query_rewrites":"0",

"optimizer_stats_analyze":"0",

"optimizer_stats_other":"0",

"optimizer_setting_up_subselect":"0",

"optimizer_distributed_optimizations":"0",

"optimizer_enumerate_temporary_tables":"0",

"optimizer_singlebox_optimizations_agg":"0",

"optimizer_stats_autostats":"0",

"generating_query_mpl":"0",

"generating_user_function_mpl":"0",

"unknown":"0",

"total":"0"

}

}

}VISUAL EXPLAIN:

SELECT people.user, people.first, people.last, age.ageFROM people JOIN age ON people.id = age.age_idORDER BY age.age;

Last modified: February 23, 2024